Bias, Power, and More on Confounds

What do we mean by bias?

For our purposes, let’s define it as:

consistent and systematic deviation from the expected norm stemming from a flaw in the design of study.

Order Effects

“The order of presenting the treatments affects the dependent variable.” (Cozby, 137)

(sometimes called sequence effects)

Recency Bias

People treat the most recently presented things differently from the others.

Primacy Bias

People treat the things presented first differently than they would the others.

How can we avoid this?

- RANDOMIZE, RANDOMIZE, RANDOMIZE.

Other options?

Sometimes simple randomization won’t work. Maybe there are repeated measures and you don’t want them to be presented back-to-back, for example. Or maybe the order is an effect that you’re actually interested in.

Other options?

A latin square design is sometimes a good way to go.

| First | Second | Third | Fourth |

|---|---|---|---|

| A | B | C | D |

| B | C | D | A |

| C | D | A | B |

How many [X] do I need?

This is probably the most commonly asked question. First we should cover a few preliminaries:

- Types of errors

- Effect Size

- Power

Consider the following

From Pollard and Richardson (1987):

- “If a person is American then they are probably not a member of Congress; this person is a member of Congress; therefore this person is probably not an American.”

Sensitivity

“Whenever you find a null result and it is interesting to you that the result is null, you should always indicate the sensitivity of your analysis.” (Dienes, p.68)

Power

Confidence Intervals

Finding an effect significantly different from another reference point.

Stopping Rules

-Neyman and Pearson would argue that you need to adequately define the conditions under which you will stop collecting data a priori.

- Believe it or not, anything can be “significant” if you just keep collecting data forever.

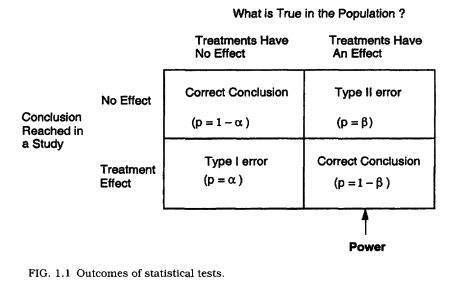

Types of Errors

Type I Error: Wrongly claiming something to be true, useful or knowable.

Type II Error: Wrongly claiming something to be false, useless or unknowable.

Types of Errors

- Type I error is when the null is true and we reject it.

- Type II error is when the null is false and we accept it.

Alpha and Beta

\(\alpha\) = The part of a distribution that is so extreme that we can reject the likelihood of it happening (usually in alignment with our maximum accepted p-value.)

- Another way of thinking about it is that \(\alpha\) is the long-term error rate for a specific type of error: saying that the null is false when it is true (see Dienes, p.62)

\(\beta\) = When the null is false, \(\beta\) is the proportion of times that we accept it, even though it’s false.

Types of Errors

types of errors

put another way…

- \(\alpha\) = \(P(rejecting H_0 \mid H_0)\)

- \(\beta\) = \(P(accepting H_0 \mid H_0 false)\)

An example

(from Dienes, p.63)

| Decision | \(\ H_0\) true | \(\ H_0\) false |

|---|---|---|

| Accept \(\ H_0\) | 3800 | 500 |

| Reject \(\ H_0\) | 200 | 500 |

| Totals | 4000 | 1000 |

- proportion of Type I errors shown when the null is true is 200/5000 (.05)

- But what if we define a type I error as ’the probability of a Type I error _when we have rejected the null’?

An Example

This means we consider only the cases when we have rejected the null. (200/700, or 29%)

The point: Using only a significance level of 5% does not guarantee that only 5% of all published significant results are an error.

\(\alpha\) and \(\beta\)

- Notice how \(\beta\) can be very different from \(\alpha\).

- Controlling one does not mean you have controlled the other.

- Power is defined as 1-\(\beta\).

- Put another way, power is:

- \(P(rejecting H_0 \mid H_0 false)\)

Ethics?

- the question for you is, is a Type II error as important as a Type I?

- If so, you could set both \(\alpha\) and \(\beta\) at .05 (power would be .95).

- If you think that type II errors are not quite as important, one could set the \(\beta\) higher, at something like .1, for example.

Steps for \(\beta\)

Estimate the size of effect that you think is interesting, given that your theory is true.

Estimate the amount of noise that your data will have.

- “The probability of detecting an effect depends not just on how big the effect is, but how much noise there is through which you are trying to detect the effect.” (Dienes, p.64)

What’s your stopping rule?

- Can you run as many participants as you’d like until you get a significant result?

What if you’d like to add tests or conditions?

If you plan it ahead of time, then you can stick with your original \(\alpha\).

if you would like to test other things, as you go, you need to correct for multiple tests.

- A standard (and conservative) approach to this is the Bonferroni correction, in which you divide \(\alpha\) by the number of tests. (so .05/2 for two tests, .05/3 for three tests, etc.).

Criticisms of Neyman-Pearson

(taken from Dienes, p.76)

- Inference consists of simple acceptance and rejection

- Null hypothesis testing encourages weak theorizing

- In the Neyman-Pearson approach it is important to know the reference class–we must know what endless series of trials but never did.